Pragmatic Probabilistic Programming: Parameter Adaptation in PyMC3

Colin Carroll, Freebird, Inc.

Cambridge, MA

Slides are online!

Code is also available here.

Many thanks to the PyMC team and contributors, and also to autograd + jax developers.

PyMC3 Syntax

import pymc3 as pm

with my_model: # defined earlier

trace = pm.sample() # ✨✨magic✨✨

PyMC3 and Stan

"There's an old saying... 'Python is the second-best language for everything'" - @callahad #PyCon2018

— Jake VanderPlas (@jakevdp) May 11, 2018

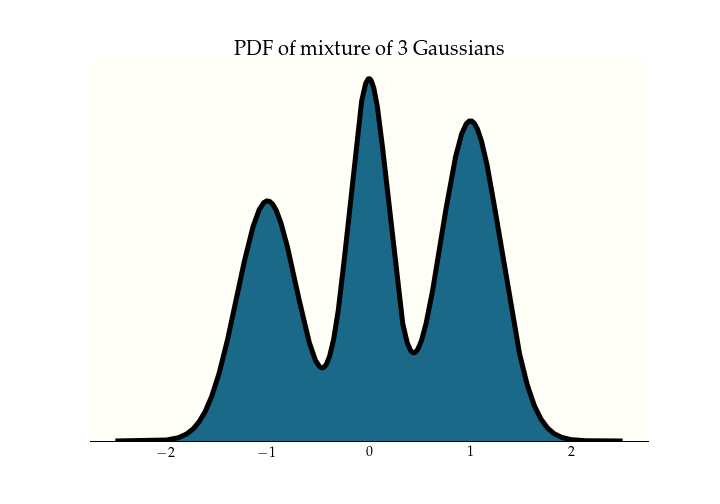

What is tuning?

$$ \mathbb{E}[f] = \int f~d\pi \approx \frac{1}{n}\sum_n f(x_j),~~x_j\sim \pi $$

Metropolis-Hastings Adaptation

$$A(x_t) = \min\left\{1, \frac{\pi(x_t)}{\pi(x_{t - 1})}\right\}$$

Baby Steps

Giant Steps

Optimal Steps

Effective sample size

$$\hat{\mu} = \frac{1}{n}\sum_{j=1}^n x_j$$

$$\operatorname{Var}(\hat{\mu}) = \frac{\sigma^2}{n}$$

Effective sample size

$$\hat{\mu} = \frac{1}{n}\sum_{j=1}^n x_j$$

$$\operatorname{Var}(\hat{\mu}) = \frac{\sigma^2}{n_{eff}}$$

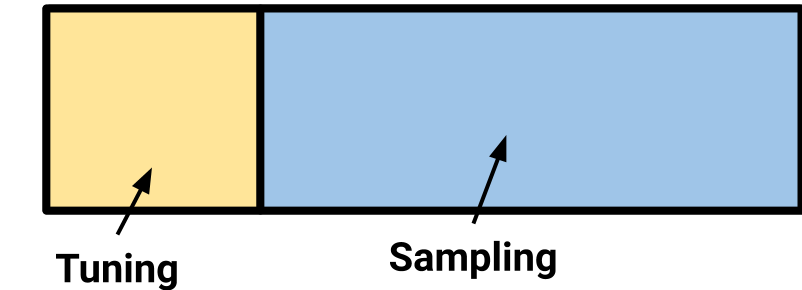

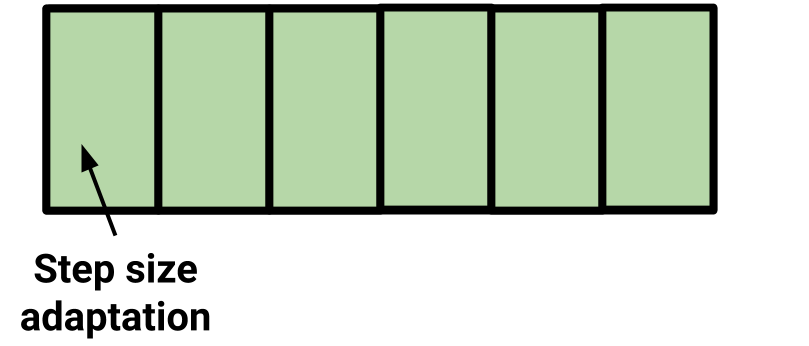

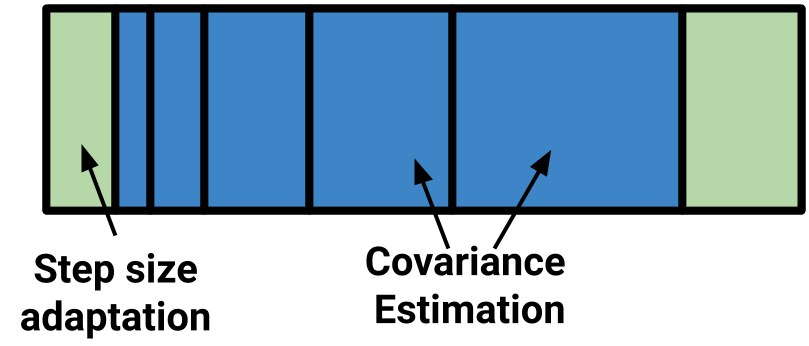

Windowed adaptation

Tuning in PyMC3

def tune(scale, acc_rate):

"""Tunes the scaling parameter for the proposal

distribution according to the acceptance rate over the

last tune_interval:

Rate Variance adaptation

---- -------------------

<0.001 x 0.1

<0.05 x 0.5

<0.2 x 0.9

>0.5 x 1.1

>0.75 x 2

>0.95 x 10

"""

Tuning in PyMC3

def tune(scale, acc_rate):

if acc_rate < 0.001:

# reduce by 90 percent

scale *=0.1

elif acc_rate < 0.05:

# reduce by 50 percent

...

elif acc_rate > 0.5:

# increase by ten percent

scale *= 1.1

return scale

Hamiltonian Monte Carlo

$$\frac{d \mathbf{q}}{dt} = \mathbf{p}, ~~ \frac{d \mathbf{p}}{dt} = - \frac{\partial V}{\partial \mathbf{q}}$$

Hamiltonian Monte Carlo

Hamiltonian Monte Carlo

Hamiltonian Monte Carlo

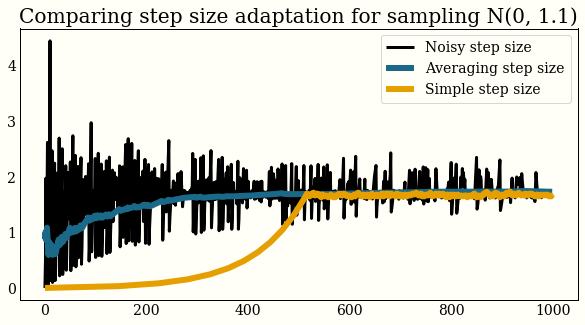

Step Size Adapt

def dual_averaging_adapt(self, p_accept):

self.error_sum += self.target_accept - p_accept

log_step = (self.mu -

t ** -0.5 * self.error_sum / self.gamma)

eta = self.t ** -self.kappa

self.log_averaged_step *= (1 - eta)

self.log_averaged_step += eta * log_step

self.t += 1

return np.exp(log_step), np.exp(self.log_averaged_step)

Step Size Adapt

Covariance Estimation

class _WeightedVariance:

def add_sample(self, x):

self.w_sum += 1

old_diff = x - self.mean

self.mean[:] += old_diff / self.w_sum

new_diff = x - self.mean

self.raw_var[:] += old_diff * new_diff

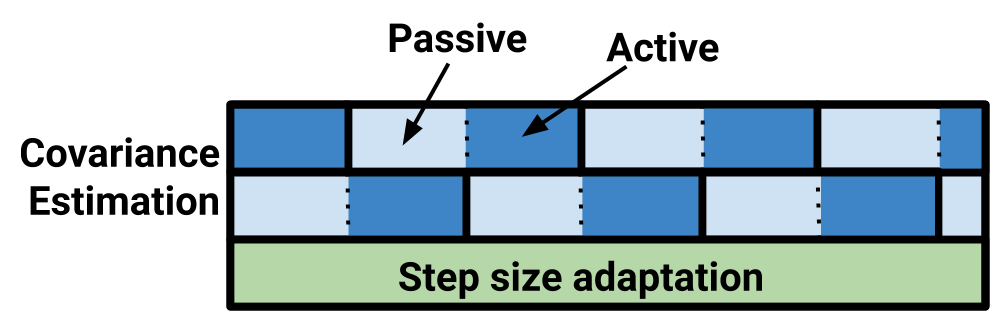

Covariance Estimation

class QuadPotentialDiagAdapt:

def update(self, sample):

self._foreground_var.add_sample(sample)

self._background_var.add_sample(sample)

self._update_from_weightvar(self._foreground_var)

n_samples = self._n_samples

if n_samples > 0 and n_samples % self.window == 0:

self._foreground_var = self._background_var

self._background_var = _WeightedVariance()

self._n_samples += 1

PyMC3 Tuning Routine

Stan Warmup

Takeaways

- Automate what you can

- Consider effective sample size

- Windowed adaptation